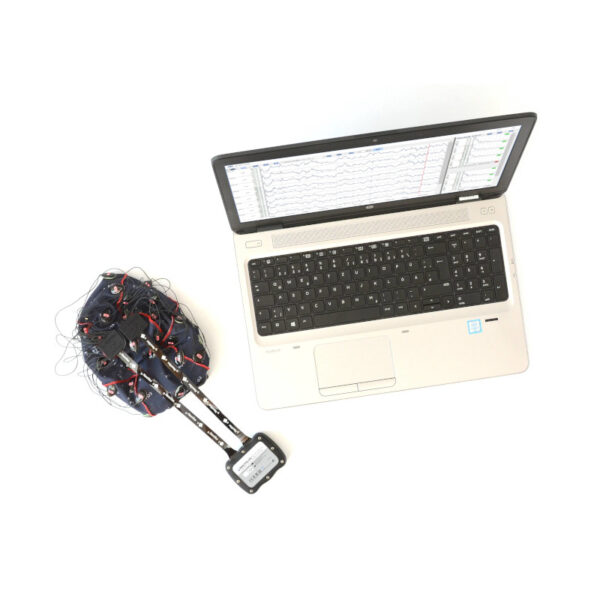

The g.BCIsys is a brain-computer interface (BCI) that provides a communication channel between the human brain and a computer. Mental activity involves electrical activity, and these electrophysiological signals can be detected with techniques like the Electroencephalogram (EEG) or Electrocorticogram (ECoG). The BCI system detects these changes and transforms them into control signals, which can be used for moving objects, writing letters, opening doors, changing TV channels, controlling devices and other everyday household activities. This helps people with limited mobility increase their independence and enables completely paralyzed patients who suffer from disorders of consciousness (DOC), severe brain injuries, or locked-in syndrome to communicate with their environments. Furthermore, BCI technology can be used as a tool to assess the remaining cognitive functions of these patients. BCIs are also used for motor rehabilitation after stroke and for brain mapping procedures.

g.tec’s BCI system includes all hardware and software components needed for biosignal acquisition, real-time and offline biosignal analysis, data classification and neurofeedback. A BCI system can be built with g.USBamp, g.HIamp or g.Nautilus. Together with g.HIsys, you will have a powerful software package to read the biosignal data directly into Simulink. Simulink blocks are used to visualize and store the data. The parameter extraction and classification can be performed with standard Simulink blocks, the g.HIsys library or self-written S-functions. After EEG data acquisition, the data can be analyzed with g.BSanalyze and the EEG, ECoG, CCEP and classification toolboxes. By using BCI sample applications, you can develop state-of-the-art BCI experiments within a few hours.

- Complete BCI research system for EEG and ECoG

- Ready-to-go paradigms for spelling, robot and cursor control

- Seamless integration of real-time experiments and off-line analysis

- Open source paradigms let you make adaptations and develop applications easily

- BCI technology proven across hundreds of subjects and labs

- Recommended setup for a fully equipped BCI lab plan available

- MATLAB/Simulink Rapid Prototyping environment speeds up development times from months to days

- Runs with g.USBamp, g.HIamp or g.Nautilus technology

- Zero class enabled for SSVEP, P300 and motor imagery

- The only environment that supports all BCI approaches (P300, SSVEP/SSSEP, Motor Imagery, cVEP, slow waves)

- Integrates invasive and non-invasive stimulation for closed-loop experiments

- Multi-device feature to record from multiple subjects or different g.tec biosignal amplifiers

- Motor Imagery Common Spatial Patterns

- Motor Imagery Common Spatial Patterns with FES

- Code-based BCI

- SSVEP

- Hybrid

- Ping Pong

- EMG and EOG Control

- Fusiform Face Area

- Hyperscanning

- Virtual Reality and Augmented Reality (P300 mastermind, cVEP space traveller, SSVEP smart home)

- Visual P300

- Vibro-tactile P300

- Functional Near Infrared Spectroscopy (fNIRS)

MOTOR IMAGERY

One of the most common types of Brain-Computer Interface (BCI) systems relies on motor imagery (MI). The user is asked to imagine moving either the right or left hand. This produces specific patterns of brain activity in the EEG signal, which an artificial classifier can interpret to detect which hand the user imagined moving. This approach has been used for a wide variety of communication and control purposes, such as spelling, navigation through a virtual environment, or controlling a cursor, wheelchair, orthosis, or prosthesis.

MOTOR REHABILITATION WITH ROBOTIC DEVICES

Training with motor imagery (MI) is known to be an effective therapy in stroke rehabilitation, even if no feedback about the performance is given to the user. Providing additional real-time feedback can encourage Hebbian learning, which increases cortical plasticity, and could improve functional recovery. The MI based Brain-Computer Interface (BCI) can be linked to a rehabilitation robot or exoskeleton. When the user imagines a certain type of movement, the BCI system can detect this movement imagery and trigger devices to provide feedback.

P300 EVENT-RELATED POTENTIAL

The P300 is another type of brain activity that can be detected with the EEG. The P300 is a brainwave component that occurs after a stimulus that is both important and relatively rare. In the EEG signal, the P300 appears as a positive wave roughly 300 ms after stimulus onset. The electrodes are placed over the posterior and occipital areas, where the P300 is most prominent.

MOTOR IMAGERY BASED PING PONG GAME

When playing Ping Pong, two people are connected to the BCI system and each controls a paddle with motor imagery. The paddle moves upwards via left hand movement imagination and downwards via right hand movement imagination. The algorithm extracts EEG bandpower features in the alpha and beta ranges of two EEG channels per person. In total, 4 EEG channels are therefore analyzed and classified.
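As a rough illustration of the bandpower features described above, the following Python sketch computes log band power in an alpha and a beta band for two channels of one player. It is not g.tec's implementation: the band edges (8-12 Hz and 16-24 Hz), the sampling rate, the function names and the synthetic data are all assumptions made for the example.

```python
import numpy as np

def band_power(signal, fs, low, high):
    """Mean periodogram power of `signal` between `low` and `high` Hz."""
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / (fs * len(signal))
    mask = (freqs >= low) & (freqs <= high)
    return psd[mask].mean()

def player_features(eeg, fs, bands=((8.0, 12.0), (16.0, 24.0))):
    """Log band power per channel and band; `eeg` has shape (channels, samples).

    The band edges are assumed values, not taken from the product description.
    """
    return np.array([np.log(band_power(ch, fs, lo, hi))
                     for ch in eeg for lo, hi in bands])

# Synthetic two-channel recording for one player (hypothetical data).
fs = 256                                   # assumed sampling rate in Hz
t = np.arange(2 * fs) / fs                 # 2 seconds of data
rng = np.random.default_rng(0)
ch1 = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)  # strong 10 Hz rhythm
ch2 = 0.1 * rng.standard_normal(t.size)                               # noise only
features = player_features(np.vstack([ch1, ch2]), fs)
# Order: [ch1 alpha, ch1 beta, ch2 alpha, ch2 beta]
```

With two players, this yields two such four-element feature vectors, one per person, which a classifier can then map to "up" or "down".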

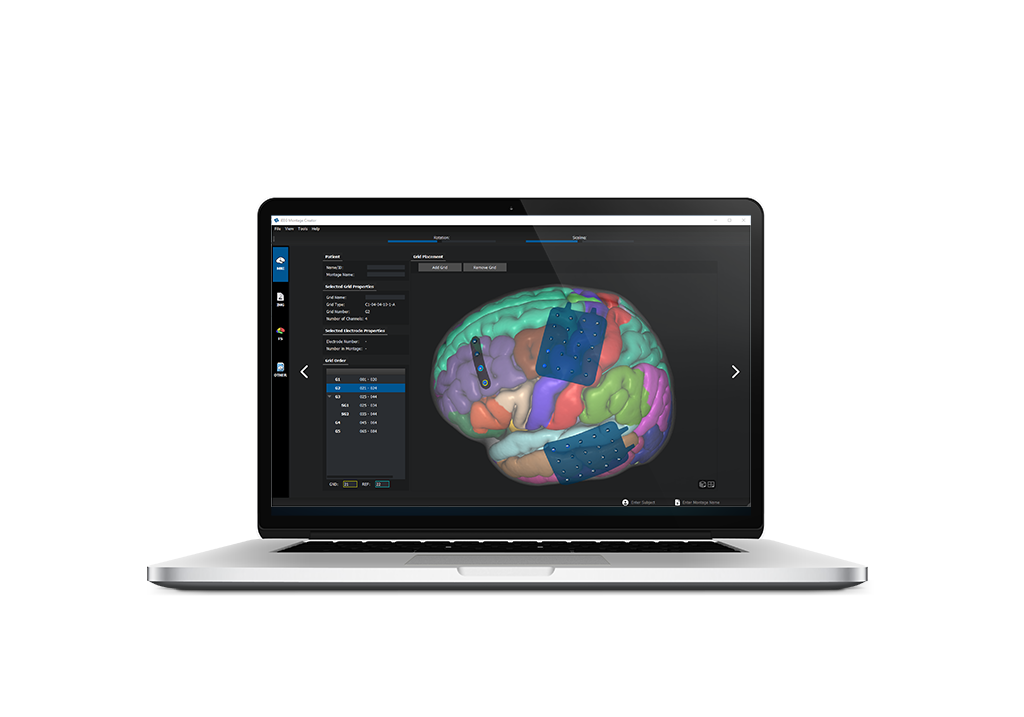

HIGH-GAMMA MAPPING AND CONTROL

While most BCIs rely on the EEG, some of the latest work has drawn attention to BCIs based on ECoG. Research has repeatedly demonstrated that ECoG can outperform comparable EEG methods. ECoG-based systems have several advantages over EEG systems, including:

- higher spatial resolution

- higher frequency range

- fewer artifacts

- no need to apply gel before use

ECoG methods can improve BCIs and help address fundamental questions in neuroscience. A few efforts have sought to map “eloquent cortex” with ECoG. That is, scientists have studied language areas of the brain while people say different words or phonemes. Results revealed far more information than EEG-based methods and have inspired new ECoG BCIs that are impossible with EEG BCIs. Other work explored the brain activity associated with movement.

This has been very well studied with the EEG, leading to the well-known dominant paradigm that real and imagined movement affects activity in the 8-12 Hz range. ECoG research showed that this is only part of the picture. Movement also affects a higher frequency band, around 70-200 Hz, which cannot be detected with scalp EEG. This higher frequency band is more focal and could lead to more precise and accurate BCIs than EEG methods could ever deliver.

“We have several ongoing studies. One of these studies is to extend human arm control to new approaches that control more than two arms with the help of brain-computer interfaces.”

Hiroshi Ishiguro, PhD - Intelligent Robotics Laboratory, Japan

“The g.tec brain-computer interface environment allows my lab to rapidly realize new applications.”

Nima Mesgarani, PhD - Columbia University, New York, USA

“g.BCIsys allows my lab to run many different EEG and ECoG studies for BCI control and passive functional mapping of epilepsy and tumor patients. The integration with intracranial electrical stimulation is especially important for me.”

Kyousuke Kamada, MD, PhD - Megumino Hospital, Hokkaido, Japan

“Fused with a variety of rapid prototyping and research software tools, g.HIamp serves as a unique tool in our clinical research applications to record electrocorticogram and local field potentials. The oversampling process executed by the internal DSP provides exceptional SNR and enables capturing higher frequency brain rhythms with superior quality.”

Nuri Firat Ince, PhD - University of Houston, USA

“We will witness more and more mixed fNIRS and EEG BCIs entering into the human augmentation arena, as more and more machine learning and AI will be coming into our lives.”

Tomasz Rutkowski, PhD - Research Scientist at RIKEN AIP, Japan

“g.Nautilus wireless EEG amplifiers allow us to investigate freezing of gait in Parkinson’s disease, a dangerous symptom for the aging population as it can lead to falls. We can synchronize the EEG signals with VICON motion capture data seamlessly, without adding extra burden on our patients such as backpacks, or wired in hardware following them.”

Aysegul Gunduz, PhD - University of Florida, USA

“With g.USBamp you don’t think about the amplifier anymore… You think about the EEG signals!”

Ales Holobar, PhD - University of Maribor, Slovenia

SMART HOME CONTROL

The BCI is connected to a Virtual Reality (VR) system that presents a virtual 3D representation of the smart home with different control elements (TV, music, windows, heating system, phone). This allows the subjects to move through the environment. Users can perform tasks like playing music, watching TV, opening doors, or moving around. For this purpose, seven control masks are available: a light mask, a music mask, a phone mask, a temperature mask, a TV mask, a move mask and a “go to” mask.

P300 SPELLING

The P300 paradigm presents e.g. 36 letters in a 6 x 6 matrix on the computer monitor. Each letter (or row or column of letters) flashes in a random order, and the subject has to silently count each flash that includes the letter that he or she wants to communicate. As soon as the corresponding letter flashes, a P300 component is produced inside the brain. The algorithms analyze the EEG data and select the letter with the highest P300 component.
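The selection step can be sketched in Python as follows. This is a deliberately simplified illustration, not the algorithm used in the product: it scores each row and column by the mean amplitude in an assumed 250-450 ms window on a single channel, whereas practical spellers typically use a trained classifier over several channels. The matrix layout, window, sampling rate and simulated data are assumptions.

```python
import numpy as np

# Assumed 6 x 6 layout; the actual speller matrix may differ.
MATRIX = np.array(list("ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789")).reshape(6, 6)

def select_letter(epochs, flash_ids, fs, window=(0.25, 0.45)):
    """Pick the letter at the row and column with the largest average response.

    epochs:    (n_flashes, n_samples) single-channel EEG following each flash
    flash_ids: 0-5 for rows, 6-11 for columns
    window:    assumed time window (s) in which the P300 is scored
    """
    start, stop = (int(round(w * fs)) for w in window)
    scores = np.array([epochs[flash_ids == k, start:stop].mean()
                       for k in range(12)])
    row = int(np.argmax(scores[:6]))
    col = int(np.argmax(scores[6:]))
    return str(MATRIX[row, col])

# Simulated session: the user attends to "P" (row 2, column 3).
fs = 256
n_samples = int(0.8 * fs)
t = np.arange(n_samples) / fs
rng = np.random.default_rng(0)
# 15 repetitions, each flashing all rows and columns in random order.
flash_ids = np.concatenate([rng.permutation(12) for _ in range(15)])
epochs = 5.0 * rng.standard_normal((flash_ids.size, n_samples))
p300 = 5.0 * np.exp(-0.5 * ((t - 0.3) / 0.05) ** 2)   # positive bump near 300 ms
epochs[(flash_ids == 2) | (flash_ids == 9)] += p300   # only target row/column
letter = select_letter(epochs, flash_ids, fs)
```

Averaging over repeated flashes is what makes the small P300 visible above the background EEG; fewer repetitions give faster but less reliable selections.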

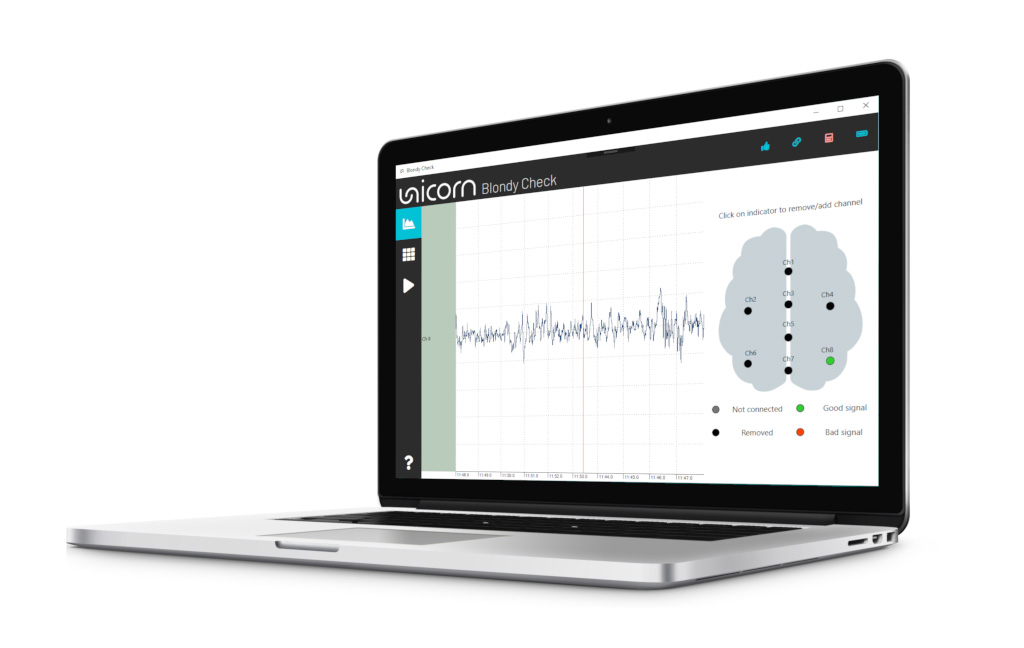

The P300 speller is available as a Simulink model that allows you to modify the system and as a ready-to-go application for patient use (Unicorn Suite Hybrid Black).

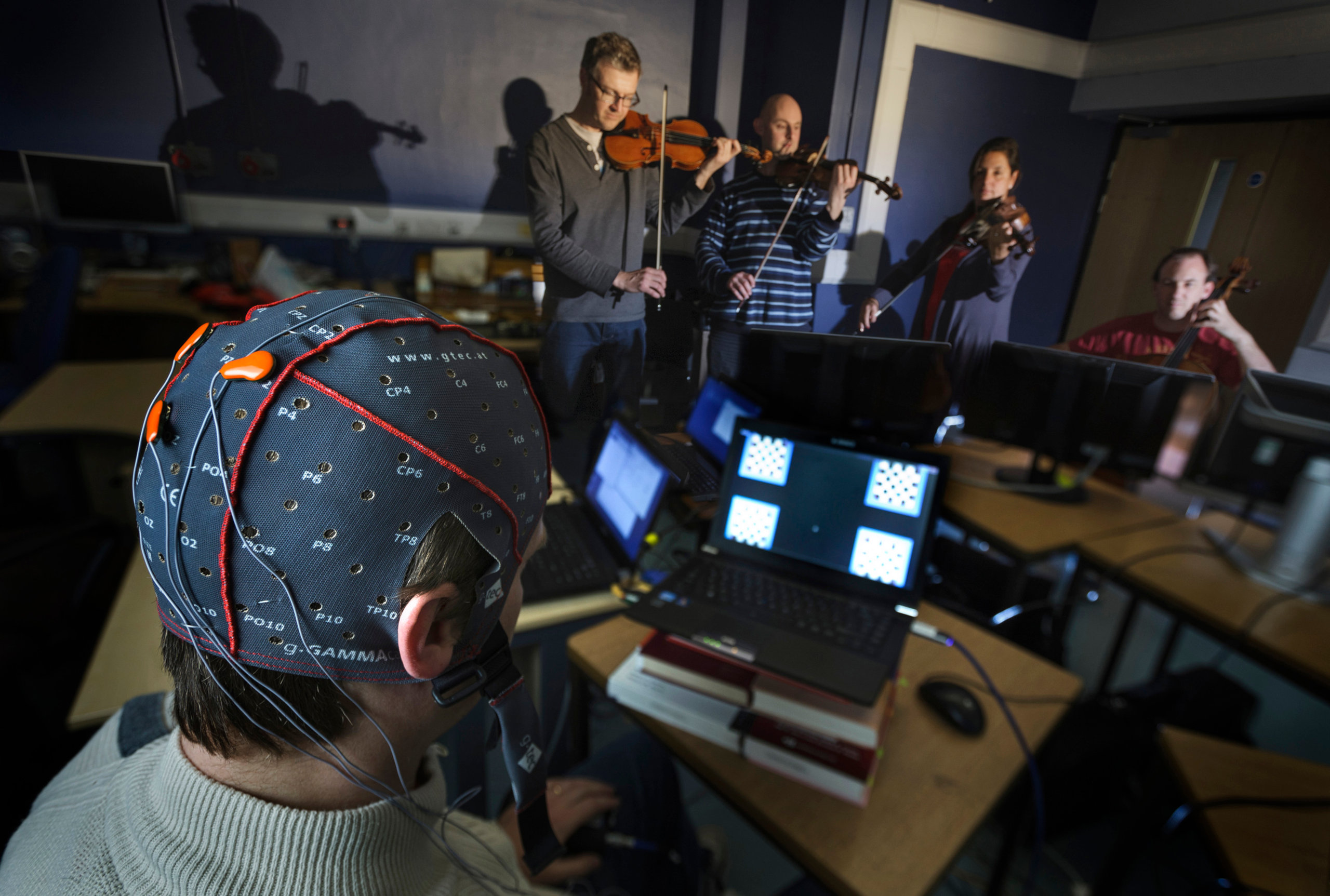

HYPERSCANNING - CONNECTING MINDS

The P300 speller was used for a demonstration called “Hyperscanning” that represents an important step toward direct cooperation between people through their minds. By combining the brainwave signals across many people, the system manages to substantially improve communication speed and accuracy. This approach could be used for cooperative control for many different applications. People might work together to play games or draw paintings, or could work together for other tasks like making music, voting or otherwise making decisions, or solving problems. Someday, users might put their heads together for the most direct “meeting of the minds” ever.

MUSIC AND THE MIND

The LIVELAB at McMaster Institute for Music and the Mind (MIMM) is engaged in neuroscientific research that aims to understand the positive role of music training, movement and performance. Researchers study music in a live setting using g.Nautilus to learn how performers interact, how audiences move during a performance and the social and emotional impact of these experiences.

CODE-BASED BCI

Pseudo-random stimulation sequences on a screen (code-based BCI) can be used to control a robotic device. The amplifier sends the EEG data to the BCI system, which allows the subject to control a robotic device in real-time. The code-based BCI system can reach a very high online accuracy, which is promising for real-time control applications that require a continuous control signal. The code-based BCI principle is available in g.HIsys as the cVEP add-on toolbox. The toolbox can analyze EEG data in real-time and provide code-flickering icons to remote screens via the SOCI module. This allows you to integrate control icons in external applications, for example applications programmed in Unity.
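One approach to code-based BCIs that is common in the research literature assigns each target the same pseudo-random code at a different circular shift and picks the target whose shifted template correlates best with the measured response. The Python sketch below illustrates that idea only; the source does not state how the cVEP toolbox works internally, and the code length, shift, noise level and function names are assumptions.

```python
import numpy as np

def shifted_templates(template, n_targets, lag):
    """One template per target: the same code, circularly shifted by k * lag."""
    return np.stack([np.roll(template, k * lag) for k in range(n_targets)])

def classify_cvep(response, templates):
    """Index of the template with the highest correlation to the response."""
    corr = [np.corrcoef(response, tpl)[0, 1] for tpl in templates]
    return int(np.argmax(corr))

rng = np.random.default_rng(1)
# Stand-in pseudo-random binary code; real systems often use m-sequences.
code = rng.integers(0, 2, size=63).astype(float)
templates = shifted_templates(code, n_targets=4, lag=15)

# Simulated response while the user looks at target 2 (hypothetical data).
response = templates[2] + 0.3 * rng.standard_normal(code.size)
target = classify_cvep(response, templates)
```

In practice the templates would be learned from calibration EEG rather than taken directly from the stimulus code.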

SSVEP

Steady state visual evoked potentials (SSVEP)-based BCIs use several stationary oscillating light sources (e.g. flickering LEDs, or phase-reversing checkerboards), each of which oscillates at one unique frequency. When a person gazes at one of these lights, or even focuses attention on it (for example, the light that is assigned to the “move forward” command), then the EEG activity over the occipital lobe will show an increase in power at the corresponding frequency.

g.tec’s algorithms determine which EEG frequency component(s) are higher than normal, which reveals which light the user was observing and thus which movement command the user wanted to send. This system includes a “no-control” state as well. When the user does not look at any oscillating light, the robot doesn’t move.
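The idea of finding the elevated frequency component, including a "no-control" state, can be sketched in Python as below. This is an illustrative simplification and not g.tec's algorithm: it compares the spectral power at each stimulation frequency with the median power between 5 and 30 Hz and reports no selection when the best ratio stays under a threshold. The frequencies, the threshold of 20 and the synthetic signals are assumptions that would need tuning on real data.

```python
import numpy as np

def detect_ssvep(eeg, fs, stim_freqs, ratio_threshold=20.0):
    """Return the index of the attended stimulus, or None for "no control".

    ratio_threshold is an assumed value, not a recommended setting.
    """
    windowed = eeg * np.hanning(len(eeg))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(eeg), d=1.0 / fs)
    # Median power over a broad band serves as the "normal" reference level.
    baseline = np.median(power[(freqs >= 5.0) & (freqs <= 30.0)])
    ratios = [power[np.argmin(np.abs(freqs - f))] / baseline for f in stim_freqs]
    best = int(np.argmax(ratios))
    return best if ratios[best] >= ratio_threshold else None

fs = 256
t = np.arange(4 * fs) / fs                 # 4 s of single-channel occipital EEG
rng = np.random.default_rng(0)
stim_freqs = [8.0, 10.0, 12.0, 15.0]       # hypothetical flicker frequencies
gazing = np.sin(2 * np.pi * 12.0 * t) + rng.standard_normal(t.size)
idle = rng.standard_normal(t.size)         # user looks at none of the lights

selected = detect_ssvep(gazing, fs, stim_freqs)
no_control = detect_ssvep(idle, fs, stim_freqs)
```

Here `selected` refers to the 12 Hz light, while `no_control` is `None`, which corresponds to the robot not moving.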

AUDITORY AND VIBRO-TACTILE STIMULATION

P300 BCIs based on visual stimuli do not work with patients who have lost their vision. Therefore, auditory paradigms can be implemented using a frequent stimulus with a certain frequency and an infrequent stimulus with another frequency. The user is asked to count how many times the infrequent stimulus occurs. As with the visual P300 speller, the infrequent stimuli also produce a P300 response in the EEG. The same principle can be used for vibrotactile stimulation if e.g. the right hand is frequently stimulated and the left hand is infrequently stimulated. The EEG will exhibit a P300 if the user is paying attention to the infrequent stimuli. This auditory and vibrotactile setup can assess whether the patient is able to follow instructions and experimental procedures.
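A stimulus sequence for such an oddball paradigm could be generated along the following lines. This Python sketch is only an assumption about how one might set it up: the 20% share of infrequent stimuli, the labels and the function name are hypothetical and are not taken from the product.

```python
import numpy as np

def oddball_sequence(n_trials, p_infrequent=0.2, seed=None):
    """Randomly ordered labels with a fixed share of infrequent stimuli."""
    rng = np.random.default_rng(seed)
    n_rare = int(round(n_trials * p_infrequent))
    seq = np.array(["frequent"] * (n_trials - n_rare) + ["infrequent"] * n_rare)
    rng.shuffle(seq)   # in-place shuffle of the stimulus order
    return seq

sequence = oddball_sequence(100, p_infrequent=0.2, seed=0)
# Number of infrequent stimuli the user is asked to count silently.
expected_count = int(np.sum(sequence == "infrequent"))
```

Each label would then be mapped to a tone frequency or to a vibrotactile stimulator on the left or right hand.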

AVATAR CONTROL

Avatar control has been developed through the research project VERE (Virtual Embodiment and Robotic Re-Embodiment). The VERE project is concerned with embodiment of people in surrogate bodies so that they have the illusion that the surrogate body is their own body – and that they can move and control it as if it were their own. There are two types of embodiment:

- robotic embodiment

- virtual embodiment

In the first type, the person is embodied in a remote physical robotic device, which they control through a BCI. For example, a patient confined to a wheelchair or bed, who is unable to physically move, may nevertheless re-enter the world actively and physically through such remote embodiment. In the second type, the VERE project used the intendiX ACTOR protocol to access the BCI output from within Unity to control both the virtual and robotic avatars.

The BCI is part of the intention recognition and inference component of the embodiment station. The intention recognition and inference unit takes inputs from fMRI, EEG and other physiological sensors to create a control signal together with access to a knowledge base, taking into account body movements and facial movements. This output is used to control the virtual representation of the avatar in Unity and to control the robotic avatar. The user gets feedback showing the scene and the BCI control via the HMD or a display. The BCI overlay, for example, allows users to embed the BCI stimuli and feedback within video streams recorded by the robot and the virtual environment of the user’s avatar. The user is situated inside the embodiment station, which also provides different stimuli such as visual, auditory and tactile.

The setup can also be used for invasive recordings with the Electrocorticogram (ECoG). The avatar control is promising from a market perspective because it could be used in rehabilitation systems, such as for motor imagery with stroke patients. To run these experiments, g.HIsys and Unity are required.

SOCI PROTOCOL

The SOCI module (Screen Overlay Control Interface module) is especially useful for Virtual Reality (VR) and similar applications where merging BCI controls with the application’s native interface is essential for a good user experience. Using SOCI, the platform can be configured to remotely display its stimuli and feedback on various devices and systems. The SOCI can be embedded in host applications to directly interact with BCI controls inside the displayed scene. It generates cVEP and SSVEP stimuli and supports single-symbol, row-column and random patterns for P300 stimulation.

CCEP

To record cortico-cortical evoked potentials (CCEPs), subdural ECoG grids are implanted directly on the cortex of the dominant hemisphere. Then, conventional electrical cortical stimulation mapping is used to identify e.g. Broca’s area. Next, a bipolar stimulation is performed on Broca’s area, which elicits CCEPs over the motor cortex and over the auditory cortex. Electrode channels showing an EP over the motor cortex indicate the mouth region required to say words and sentences. EPs over the auditory cortex indicate electrode positions representing the regions responsible for hearing and for understanding e.g. questions (Wernicke’s area, receptive language area). Overall, the CCEP procedure allows doctors to rapidly map out a whole functional cortical network, which provides important information for neurosurgical and BCI applications.

HYBRID BCI

Hybrid BCIs combine different input signals to provide more flexible and effective control. g.HIsys supports (i) mouse control, (ii) EMG 1D and 2D control, (iii) EOG 1D control and (iv) eye-tracker control, as well as the standard BCI signals.

EMG and EOG are recorded via the biosignal amplifier and are analyzed with g.HIsys to generate the control signals, while the mouse and the eye-tracker use external devices that are interfaced with g.HIsys. The combination of these input signals can make BCIs faster, more accurate, and more flexible. Hybrid BCIs have been gaining attention in the research literature recently, and there remain many opportunities for interesting hybrid BCI studies.

EMG and EOG CONTROL

The EMG and EOG control provides a set of BCI-type models that use eye motion (EOG) signals or muscular contraction (EMG) signals to select individual symbols, initiate commands and control external devices.

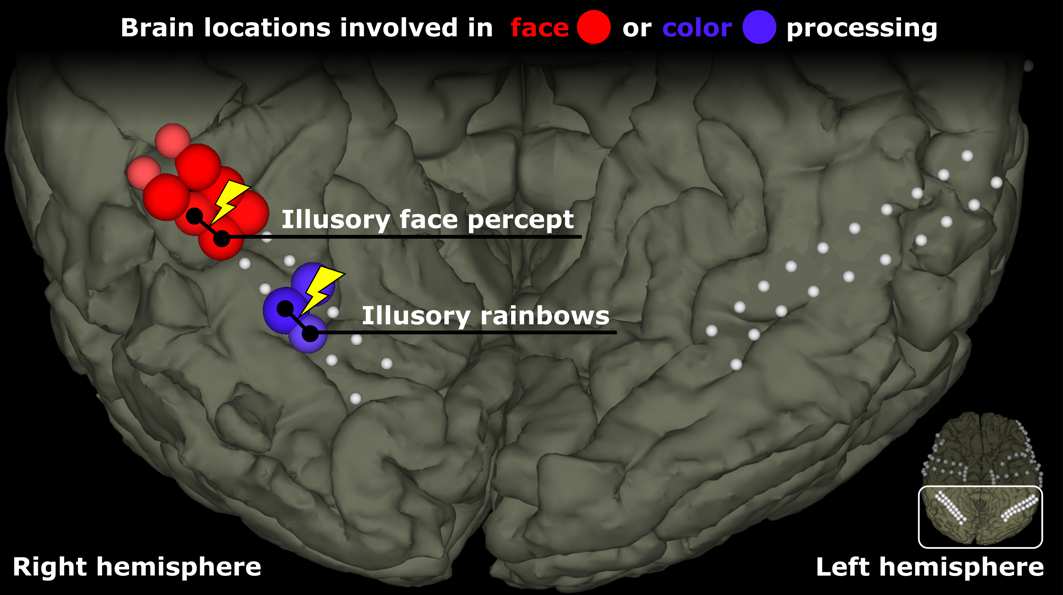

REAL-TIME FACE DECODER WITH ECOG

High-gamma activity allows doctors to map the temporal base of the cortex. This is useful to find the fusiform face area, an area responsible for identifying faces, and nearby regions responsible for coding colors, shapes, characters, etc.

In this video, Dr. Ogawa (Asahikawa Medical University, Japan) shows different faces, Kanji characters and Arabic characters to the patient with the ECoG implant (high-resolution ECoG on the temporal base on the left and right hemispheres). The patient just observes the images, and the BCI system decodes high-gamma activity from the ECoG electrodes. The BCI is thereby able to identify which image the patient is seeing in real-time. This worked whether the patient saw other people’s real faces or his own face in the mirror.

FUSIFORM FACE AREA

High-gamma activity allows doctors to map the temporal base of the cortex. This is useful to find the fusiform face area, an area responsible for identifying faces, and nearby regions responsible for coding colors, shapes, characters, etc. This region can also be used for real-time decoding. In this example, Dr. Ogawa (Asahikawa Medical University, Japan) shows different faces, Kanji characters and Arabic characters to the patient with the ECoG implant (high-resolution ECoG on the temporal base on the left and right hemispheres).

The patient just observes the images, and the BCI system decodes high-gamma activity from the ECoG electrodes. The BCI is thereby able to identify which image the patient is seeing in real-time. This worked whether the patient saw other people’s real faces or his own face in the mirror.

OVERVIEW OF BRAIN-COMPUTER INTERFACES & NEUROTECHNOLOGIES

This talk by Dr. Christoph Guger (CEO of g.tec medical engineering) was recorded during the BCI & Neurotech Spring School 2024, the biggest BCI meeting worldwide. Dive into current and future applications of brain-computer interfaces, the history of brain-computer interfaces, and non-invasive and invasive applications for rehabilitation, brain assessment and brain mapping.

MOTOR IMAGERY AND STROOP TEST WITH EEG AND FNIRS

The fNIRS toolbox contains experiments to run motor imagery BCI experiments and a Stroop test. The Simulink model for the motor imagery BCI contains all necessary data acquisition blocks for the EEG and fNIRS recorded from the sensorimotor cortex and contains all necessary pre-processing, feature-extraction, classification and experimental control blocks to run a real-time experiment. The experiment is seamlessly integrated in g.BSanalyze to calculate a classifier for each user and afterwards to run the BCI in real-time.

The Stroop experiment contains a Paradigm Presenter block that runs the Stroop test while the EEG and fNIRS are recorded from the pre-frontal cortex. Both the EEG and fNIRS can be classified and processed in g.BSanalyze to understand the cortical processing of the subject.
