3rd Place Winner of the BCI Award 2018: Brain-To-Speech

To investigate and decode articulatory speech production, Christian Herff and his team used electrocorticography (ECoG) and presented the first direct synthesis of comprehensible acoustics from areas involved in speech production alone. This is especially important because these areas are likely to show similar activity patterns during attempted speech production, as would occur in locked-in patients. In short, Brain-To-Speech could give paralyzed patients a voice and a natural means of conversation. We had the chance to talk with Christian about his BCI Award 2018 submission at the BCI Meeting 2018 in Asilomar.

g.tec: Christian, what’s your background and where do you come from? Could you also tell me a bit more about your BCI Award 2018 submission?

Christian: “I am a computer scientist currently working at the University of Bremen, but I am going to move to the University of Maastricht very soon. I work on brain-computer interfaces based on speech processes. We have been focusing on automatic speech recognition from brain signals for a while, but we present something very new in this poster. This time, instead of writing down what a person was saying, we try to synthesize the speech directly from the brain signals. So, from the measured brain signals, we directly output speech as an audio waveform.”

g.tec: And did you manage it?

Christian: “Yes, we did. I have some listening tests with me, so you can listen later if you’d like to hear firsthand how well it sounds. But, of course, for now we need implanted electrodes for that.”

g.tec: Would you describe the implantation as the biggest challenge?

Christian: “Well, I mean, this is very much a lab environment, with many challenges. Prior work on speech was not continuous; it involved single words that people read out, so continuous speech is one of the bigger challenges. But there are still a lot of other challenges to be solved. We were quite sure that we could reconstruct some aspects of speech, but we didn’t even suspect that our results would be of such high quality that you can understand the speech we reconstruct from neural signals. That was quite flabbergasting to us, and we liked that a lot.”

g.tec: How do you want your research to continue in the future?

Christian: “I think the most important step is to close the loop. So far, this is offline analysis: we recorded data, went back to our lab, and then tried to do the synthesis. If we can close the loop and have a patient synthesize speech directly from their brain, that would be perfect. So that is our next step.”

Picture: Brendan Allison, Christoph Guger, Kai Miller, Christian Herff, Sharlene Flesher, Vivek Prabhakaran at the BCI Award Ceremony in Asilomar, 2018.

g.tec: Do you know Stephanie Martin from EPFL in Switzerland? She won 2nd place in the BCI Award 2017 with her work “Decoding Inner Speech”.

Christian: “Of course, I know her very well. I last met her in San Francisco, just two weeks ago. Her work is fantastic.”

g.tec: You both work on decoding speech. How does your research differ from her research?

Christian: “Well, Stephanie discriminated between two words, but in imagined speech. In her earlier work, she was able to reconstruct spectral features of speech, which was an outstanding paper. We go one step further because we reconstruct an audio waveform from those spectral features. So I think that takes what she has learned to the next step.”

g.tec: Which patients could benefit from this research?

Christian: “I think an implantation will be necessary for quite some time, so the patient’s condition would have to be quite severe for them to be willing to undergo implantation. Locked-in patients would obviously benefit. But I also think patients with cerebral palsy who can’t control their speech articulators but have normal IQ could greatly profit from this. I have talked to some of them, and they are willing to get implants if the device is good enough.”

g.tec: Do you think implantation technologies could be realized in three years?

Christian: “Well, I do not see it in three years, but maybe in the next decade. I do not think this is too far off anymore.”

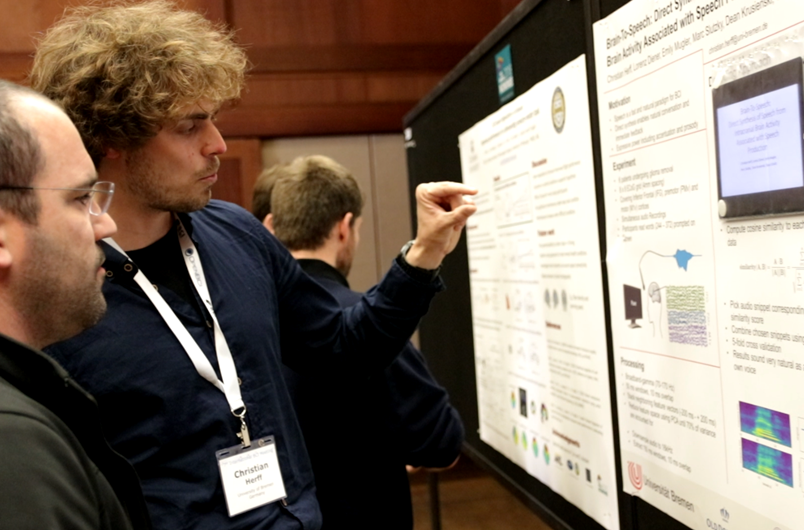

Picture: Christian Herff presenting his BCI Award 2018 submission at the BCI Conference 2018 in Asilomar.

g.tec: You are presenting your BCI Award submission here at the BCI Conference 2018 in Asilomar. What can we see on your poster?

Christian: “Sure, let me fast-forward it so we can start from the beginning. So, what we did: we recorded brain activity while people were speaking. Then, for a new test phrase, we compared each bit of data to all the data in our training set, picked the training bit that was most similar to the new bit, and took the corresponding audio snippet. We did that for each interval in our test data. For example, the second bit is most similar to the “a” in “Asian”, and for the next bit the best match is the “v” in “cave”. Then we used some signal processing to glue those audio snippets together to reconstruct “pave”, which was the word the person was saying. So it is actually a very simple pattern-matching approach, but it gives us really nice audio.”
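The pattern-matching procedure Christian describes can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not the team’s implementation: each window of neural data is assumed to be already reduced to a feature vector, each training window is assumed to have an aligned audio snippet of equal length, “most similar” is taken to mean cosine similarity, and Hann-windowed overlap-add stands in for the unspecified “signal processing” that glues the snippets together. The function names `select_units`, `overlap_add`, and `synthesize` are hypothetical.

```python
import numpy as np


def select_units(train_feats: np.ndarray, test_feats: np.ndarray) -> np.ndarray:
    """Return, for each test window, the index of the most similar training window.

    Similarity is cosine similarity between feature vectors (an assumption;
    the interview only says "most similar").
    """
    eps = 1e-12
    # Normalize rows to unit length so a dot product equals cosine similarity.
    tr = train_feats / (np.linalg.norm(train_feats, axis=1, keepdims=True) + eps)
    te = test_feats / (np.linalg.norm(test_feats, axis=1, keepdims=True) + eps)
    sims = te @ tr.T  # shape: (n_test, n_train)
    return np.argmax(sims, axis=1)


def overlap_add(snippets: np.ndarray, hop: int) -> np.ndarray:
    """Glue equal-length audio snippets together with Hann-windowed overlap-add."""
    n, win = snippets.shape
    window = np.hanning(win)
    out = np.zeros(hop * (n - 1) + win)
    norm = np.zeros_like(out)
    for i, snip in enumerate(snippets):
        start = i * hop
        out[start:start + win] += snip * window
        norm[start:start + win] += window
    # Divide by the summed window weights so overlapping regions keep their level.
    return out / np.maximum(norm, 1e-8)


def synthesize(train_feats: np.ndarray, train_audio: np.ndarray,
               test_feats: np.ndarray, hop: int) -> np.ndarray:
    """Pick the best-matching training audio snippet per test window and concatenate.

    train_audio[i] is assumed to be the audio snippet aligned with train_feats[i].
    """
    idx = select_units(train_feats, test_feats)
    return overlap_add(train_audio[idx], hop)


if __name__ == "__main__":
    # Toy data: 50 training windows, each with 8 features and 160 audio samples.
    rng = np.random.default_rng(0)
    train_feats = rng.normal(size=(50, 8))
    train_audio = rng.normal(size=(50, 160))
    # Pretend the test phrase produced features close to windows 3, 17 and 42.
    test_feats = train_feats[[3, 17, 42]] + 0.01 * rng.normal(size=(3, 8))
    audio = synthesize(train_feats, train_audio, test_feats, hop=80)
    print(select_units(train_feats, test_feats), audio.shape)
```

One consequence of this design is that every output window is recorded speech taken from the training data, so the synthesis never has to generate a waveform from scratch; the hard part shifts to choosing good matches.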

Read more: www.bci-award.com